Get Queries History

Overview

Within Indexima, query history can be saved into a CSV file to be later loaded into Indexima for analysis.

Define queries history storage place

In galactica.conf, you can set the parameter history.export to the absolute path you want to store your query history into. Eg. history.export = /var/log/indexima/history_csv

We strongly recommend that you set history.export to a different path (a sub-path is fine) than history.dir, so that the different types of log don't get mixed up.

We recommend you to define a dédicated directory in your warehouse. Thus you would be able to import those files into an Indexima table through a LOAD command.

If you want to store your history files in AWS S3, you must write the full S3 path prefixed with s3a. Eg. history.export = s3a://my-bucket/path/to/query/logs

Exported CSV files are stored each day in a different file.

Content

Files contain:

- All the queries sent to indexima

- subqueries for queries that contain subqueries. In that case, the Parent column is fulfilled

- To separate subqueries from "original" queries, we recommend creating a calculated column.

CSV format

Column name | Type | Description | Infos |

|---|---|---|---|

OPERATION | string | the SQL operation name | SELECT, LOAD, etc.. |

STATEMENT | string | the SQL statement | |

SUBMIT | integer | Number of submitted tasks | |

FINISH | integer | Number of finished tasks | |

DURATION | bigint | Execution duration | In milliseconds |

TIME | Timestamp YYYY-MM-DD hh:mm:ss | Execution start time | |

ERROR | string | Error if any | |

TABLE | string | Table name | |

IDENTIFIER | bigint | Query identifier | |

KILL | boolean | Request killed ? | True / False |

LOADED | boolean | Index Loaded during request | True / False |

RESULT | bigint | Number of results | |

USER | string | User name | |

IP | string | User IP | |

INDEX | string | Index name | |

CACHED | boolean | Request found in cache | True / False |

HYBRID | boolean | Hybrid request (disk scanning) | True / False |

DELEGATED | boolean | Request delegated to external | True / False |

EXTERNAL_TABLE_NAME | string | External table name | if delegated |

EXTERNAL_ERROR | string | External error if any | if delegated |

EXTERNAL_DURATION | bigint | Total duration of a delegated query | if delegated |

EXTERNAL_NB_ROWS_FETCHED | bigint | External number of rows fetched | if delegated |

EXTERNAL_QUERY_EXECUTION_DURATION | bigint | Duration of a SELECT query delegated, without downloading the result set | if delegated |

LOAD_TOTAL_SIZE | bigint | Number of lines loaded | LOAD statement only |

LOAD_INSERTS | bigint | Number of lines inserted | LOAD statement only |

LOAD_ERRORS | bigint | Number of lines in error | LOAD statement only |

MAX_WORKER_MEMORY | bigint | Maximum amount of memory (in bytes) used to compute a query (for all nodes, including master) | |

MASTER_MEMORY | bigint | Amount of memory (in bytes) used on the master node to compute a query | |

READ_LINES_FROM_BUCKETS | bigint | Number of lines scanned on disk | |

DISK_USAGE | bigint | Amount of data (in bytes) spilled on disk | |

RESULT_SET_SIZE | bigint | Size (in bytes) of the resultset sent back | |

PENDING_TIME | bigint | Pending duration time (ms) of the query before being executed | Since 1.7.8 |

| PARENT | bigint | Query identifier | since 1.7.12 |

| SUSPENDED | bigint | Total time (ms) while the query was in a freezing state. | since 2021.3 |

| BIG_INDEX | boolean | True is the query used the bigindex feature, false otherwise. | since 2021.3 |

| REQUEST_INITIALIZATION_DURATION | bigint | Initialization time (time to parse the query, if more than 1ms) | since 2022.1 |

Note that this "export history files" is not an official feature. The file format remains internal to the product and may be changed without notice.

Importing your logs in Indexima

Creating a Log Table

You can create the Log Table within your Indexima cluster with the following command Get_History_queries.sql

Note: You can adapt the schema and the table name you want to use

Loading Data

Then, you can load data from the CSV file saved automatically in history.export:

LOAD DATA INPATH '.../history_csv' INTO TABLE logs_table FORMAT CSV SEPARATOR ',' ;

COMMIT logs_table;Note: Adapt the values "logs_table" and "/var/log/indexima/history_csv" to the values that suit your configuration.

If you want to store your history files in AWS S3, use the same s3a path you set up in the parameter history.export.

LOAD DATA LOCAL INPATH 's3a://my-bucket/path/to/query/logs' INTO TABLE logs_table FORMAT CSV SEPARATOR ',';

COMMIT logs_table;Analyzing the queries logs

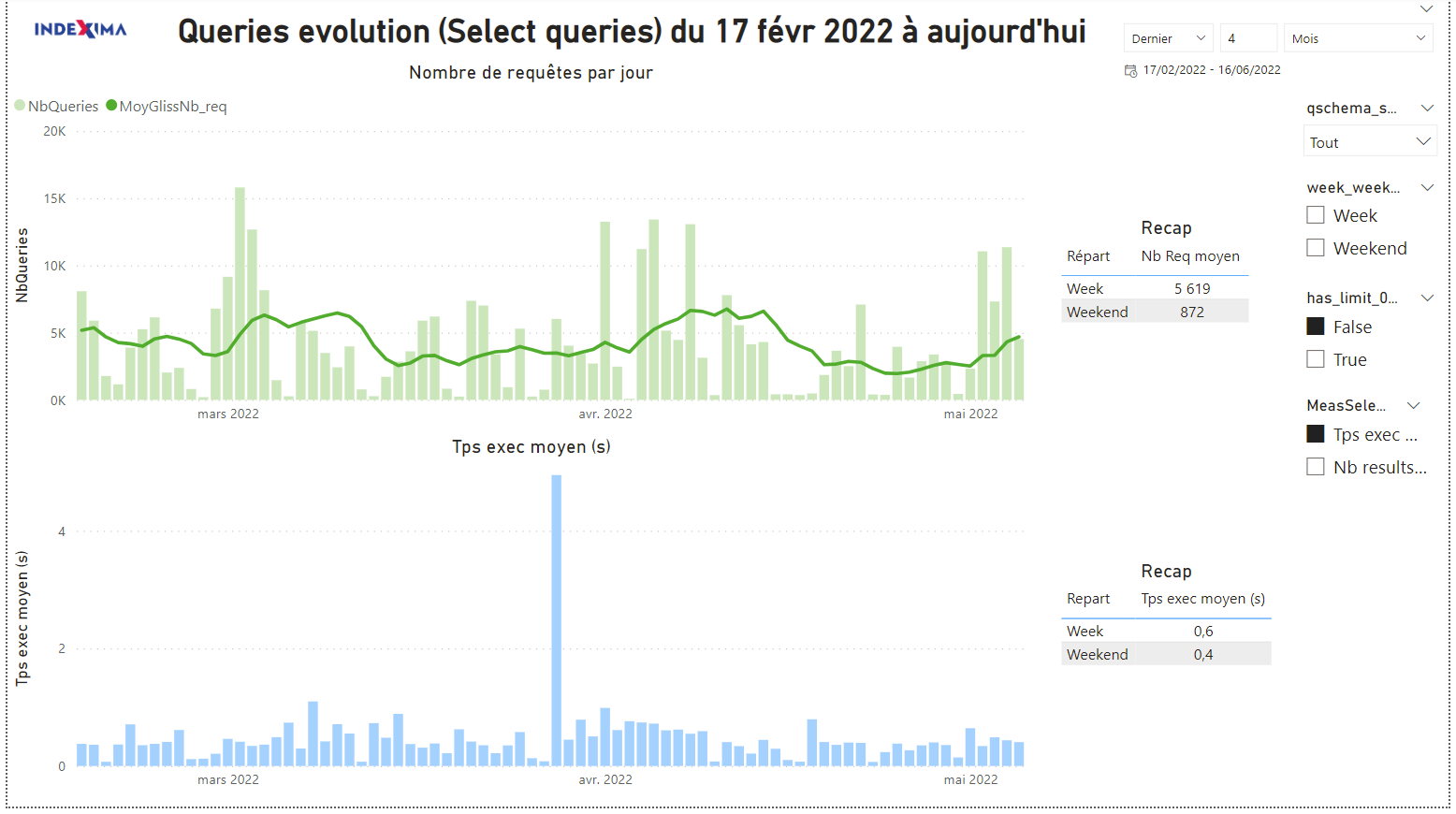

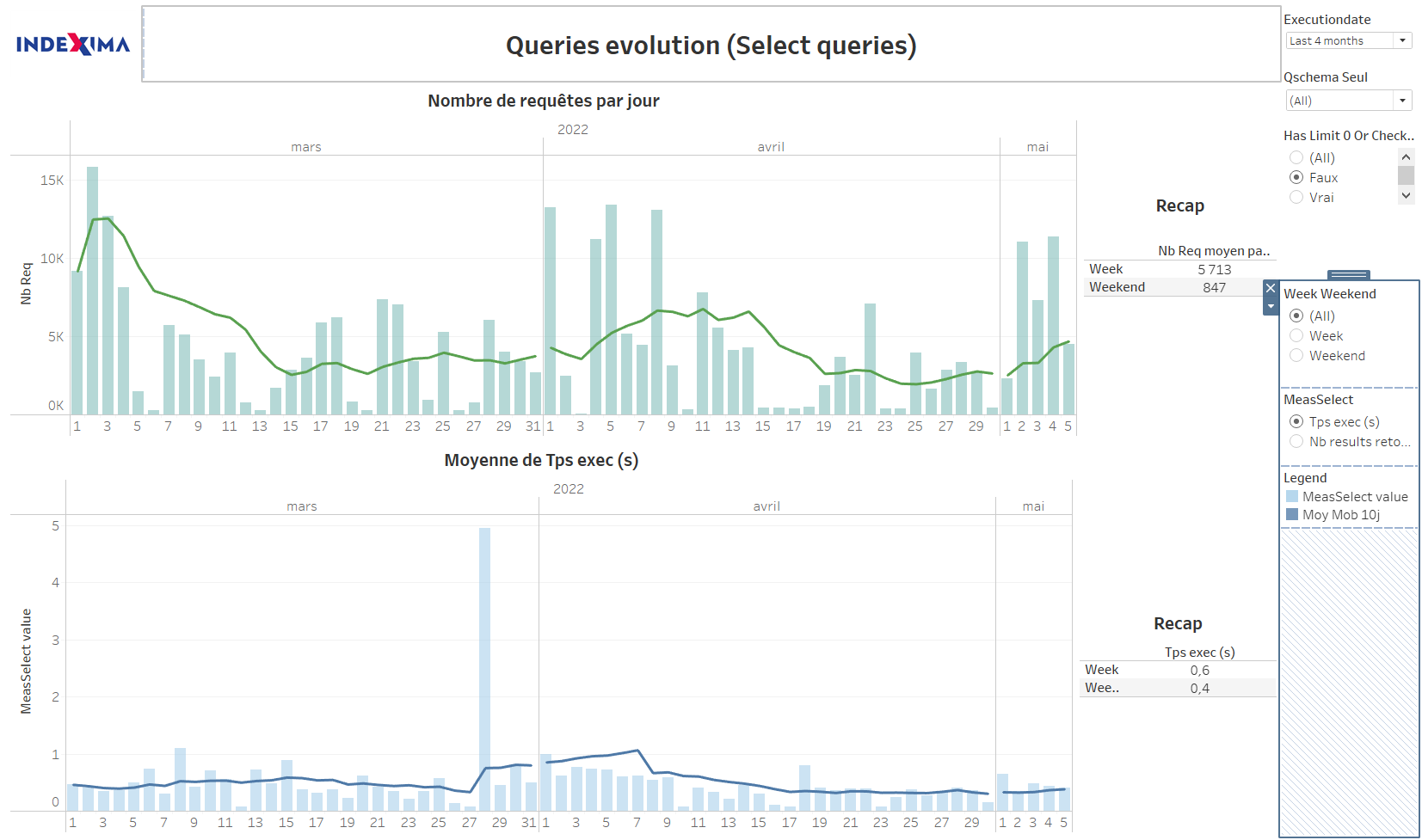

Indexima provides starting documents that anyone is free to modify.

- PowerBI: Suivi_Requetes_PowerBI.pbix

- Tableau: Suivi_Requetes_Tableau.twbx